Edge LoadBalancer service support based on MetalLB

背景介绍

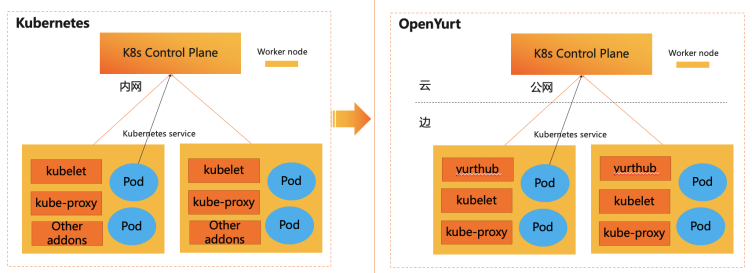

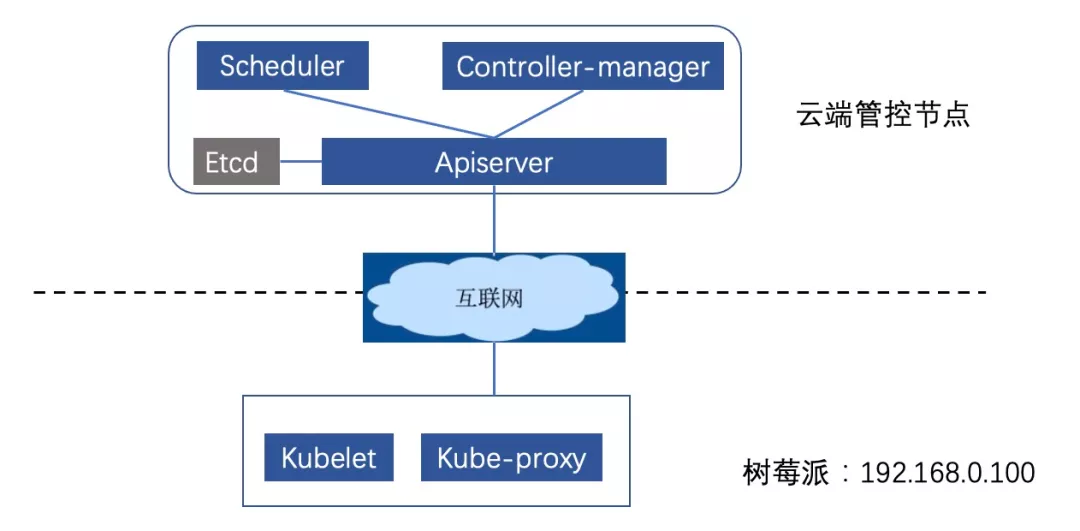

在云边协同场景下,虽然云端已经具备了较为全面的云原生能力,但边缘侧由于特定的网络环境及应用场景的限制,往往无法提供像云端一样丰富的能力,而实际上用户业务应用的主战场却在边缘侧, 这就导致边缘侧在使用云原生能力的时候或多或少存在一些gaps,而如何解决这些gaps也成为云边协同基础设施软件力求解决的关键问题。本文针对边缘侧服务暴露给集群外访问的场景,来探讨一下在 OpenYurt边缘侧解决上述问题的方法,希望能起到抛砖引玉的效果。

在原生Kubernetes集群中,如果想将服务暴露出来供集群外部访问,通常可以考虑以下几种方式:

- HostNetwork

- ExternalIPs

- NodePort

- LoadBalancer

- Ingress

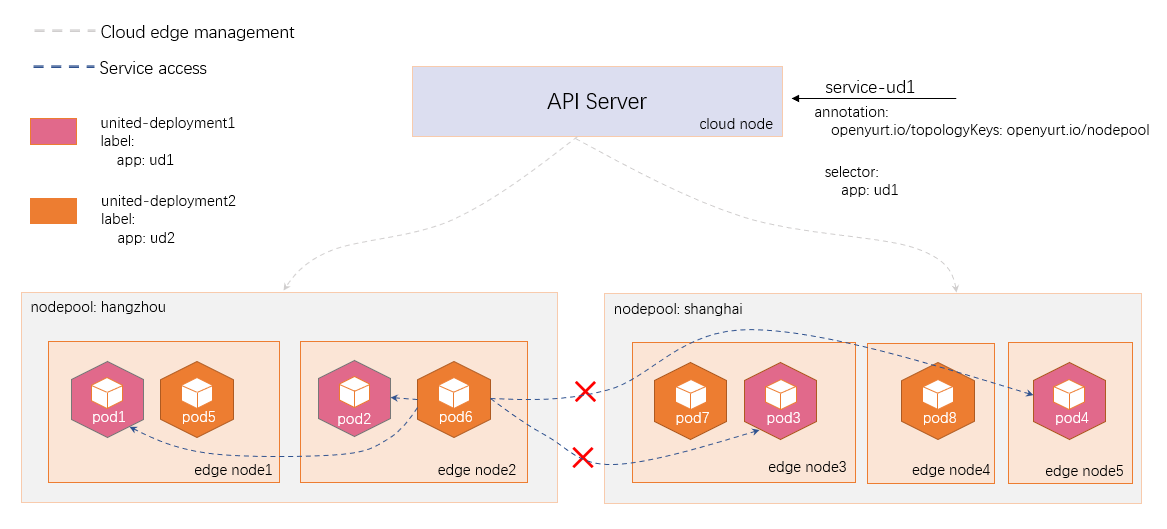

其中前三种方式,由于自身存在一定的局限性,在条件允许的情况下,通常用户更倾向于选择后两种方式。而对Ingress方式而言,云端Ingress功能通常会搭配云端SLB服务一起使用, SLB负责从用户请求到节点维度的负载均衡,而Ingress负责从节点到pod维度的负载均衡,这样就实现了从用户请求到应用pod的全链路的负载均衡功能。然而在云边协同场景下,由于边缘侧并不具备云端SLB服务的能力,边缘Ingress或边缘应用无法暴露LoadBalancer类型的服务供集群外访问,这也成为了边缘侧对外暴露服务的一大痛点。

为了解决上述痛点,目前在开源社区,有几个方案可供选择:MetalLB, PureLB和OpenELB。

- MetalLB: https://github.com/metallb

- PureLB: https://github.com/purelb

- OpenELB: https://github.com/openelb

关于三个项目之间的比较,可以从网上查到一些相关介绍,整体来说,它们实现的功能大同小异,从项目成熟度及流行度的角度考虑,我们选择以MetalLB为例,探讨一下如何通过MetalLB在OpenYurt 边缘侧实现对LoadBalancer类型service的支持。